It was Ted’s first week on the job. He was brought in to replace another manager who had disappeared on “medical leave.” Although Ted suspected his predecessor had left or had been asked to leave for different reasons, he ignored the suspicion along with his initial instinct to flee.

Ted’s new company was very serious about its data. But not serious as in designing a properly normalized data model. Nor serious as in creating a database that could be easily extended or manipulated when needed. Nor even serious as in having life-changing, large-dollar, or other highly critical information stored within. The company was serious about being unwilling to lose even the smallest iota of data. Ever.

Fortunately, this lofty and rather costly goal had already been accomplished by Ted’s predecessor. The former manager, whom everyone simply referred to as “Beaker,” had put in place a fool-proof plan to prevent any kind of data loss.

As Ted learned, years back a system that Beaker was responsible for had crashed. Badly. The database and the database backup had both failed, and they had no choice but to restore a months-old copy that a developer just happened to have sitting around. The business was mad, IT staff were fired, and Beaker vowed, “Never Again!”

Shortly after the data-loss disaster had passed, Beaker held a meeting with his staff. “What would we do if the primary database server failed?” he asked rhetorically. “We’d need a backup server, of course!” Understanding the concern, his staff dutifully purchased a server and set up a real-time, replicating database.

“But,” Beaker then realized, “we will also need to back up the database in case data becomes corrupted and is replicated across both servers!” His staff then purchased a tape drive and attached it to the primary database server. And thus, the nightly backups began.

“This won’t work, either,” Beaker explained. “What if the backups are bad? We need to test the backups!” So his staff purchased another server, with another copy of SQL Server, and another tape drive. The backups could now take place physically independent of the primary server.

Up until this point, Ted was pleased. There was very little chance that data could ever be lost. There was replication, there was testing, there was a tape-retention policy. And had that been the end of the process, this would have been a lesson in best practices and not an article on WTF.

“What about the replicated server?” Beaker then pondered. “Shouldn’t it be backed up, too? What if the primary has a backup failure, then corrupt data enters the database, and then we’re unable to restore?” So his staff bought another tape drive and attached it to the replicating database server.

Fearing the worst, Beaker posited, “But what if the primary, the secondary, and the backup all have hardware failures, and we need to restore data? What then?” Unable to convince upper management to buy more hardware, Beaker instructed his staff to recommission a desktop as a server. And thus, the fourth database server was created.

By this point, Ted was starting to wonder what he’d gotten himself into. What sounded like a solid plan to prevent data loss was becoming a FUD plan geared more toward CYA than data preservation. And that’s exactly when Ted learned the meat of the process.

Concerned about tape availability and the need for near-live restores, Beaker suggested, “When we back up the database, let’s export the backup file every night. We’ll retain one week’s worth of backups on the server and will therefore only have to bring the database offline if we use a really old backup.” So his staff created a folder on the database server to hold every night’s complete backup of the database for one week. However, because the generated backup files always had a different name, they had no choice (so the staff was led to believe) but to back up the entire folder to tape.

But still, this wasn’t enough for Beaker. “Since we’re backing up the complete database on our primary server, we should definitely be doing the same on our replicating server!” So, once again, his staff dutifully created a folder on the secondary server and set the database to export backups to that folder as well. Every night the backup routine would back up the previous night’s data as well as the data for the seven nights prior.

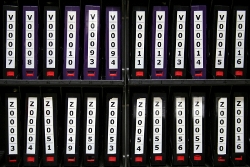

At this point, Ted sank into his chair and sighed. He quickly calculated that, for any given one-week period, there would be no fewer than sixteen copies of the entire database for any given night on any of sixteen tapes. Over the space of one week, there would be no fewer than one hundred and twelve complete backups of the database stored on tape, sixteen complete backup copies stored on disk, and four live copies on the four main servers. That came to a grand total of 132 copies of the database with data no more than one week old.

Then, Ted did something foolish. Ted thought to ask, “How many queries does the database average an hour?”

To which Ted’s new staff replied, “About 60.”