Not too long ago, I was at a client site, working to understand and improve their development process. From a bird’s-eye view, their development organization was a lot like many other Corporate IT set-ups: they had a sizable portfolio of proprietary applications that were built for and used by different business groups. Some of these applications were “mission critical” and had highly formalized promotion and deployment processes, while others were ancillary and were hardly ever used. <shameless_plug>This, along with the medley of technologies and platforms, was why they sought our help in managing and automating their development processes with BuildMaster.</shameless_plug>

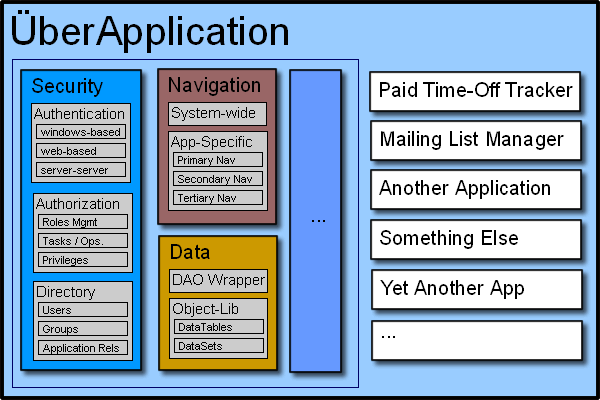

But as I dug deeper, I noticed that a significant portion of their applications weren’t applications at all. They were – for lack of a better word – “modules” that glommed together to form an ÜberApplication. Completely unrelated business functions – paid time-off tracking and customer mailing list management – lived side-by-side, sharing authorization principals, navigation controls, and even a “business workflow engine.”

Digging even further, I learned that most of these module-applications were derived from a “one-size-fits-all base application” of sorts. For example, the back-end paid time-off system was nothing more than a calendar with a custom UI for manipulating “events” (i.e. PTO requests). The front-end consisted of simply displaying these events to anxious employees who were gladly counting down the remaining days until a vacation from maintaining this amalgamation of a system. Other module-applications were “document managers”, “news posters”, or some other universal-sounding name.

The whole thing may as well have been replaced by a pre-alpha version of SharePoint or Google Docs. It was as if they had tried to build a skyscraper with Erector Sets. And not real Erector Sets, but some poorly-made knock-off.

The developers absolutely hated the ÜberApplication. It took longer to “customize” a template than to build an application from scratch, the result was harder to test and deploy, and it never quite fit the business requirements. The architect (who was the second successor since the original architect) despised it as well, and eventually convinced management to ditch it for constructing new applications from scratch.

Sadly, this was not the first time I’d seen this set-up/architecture. At one of my first jobs, we had something comparable, except much less formalized and much more disorganized. And there are all the examples I’ve shared through The Daily WTF, but this recent experience got me thinking: how exactly do well-intentioned development organizations end up with horrible systems like this?

I See a Pattern Here

We human beings are quite remarkable at recognizing patterns. Take clouds, for example. A cloud dog looks nothing like a real dog, yet no matter how hard we try, once we see the dog in the cloud, that’s all we can see.

While this ability has clear evolutionary advantages, it’s often a disservice in today’s modern world. Pattern recognition yields many false positives, which lead to the Gambler’s Fallacy and to prejudice, and can even extend to really poorly-written software.

Now, recognizing patterns at the micro level (i.e., code) is almost always a Good Thing. Code often does repeat itself, and consolidating repetitive code into subroutines tends to help throughout development and especially when it comes to maintenance. The real problem – and the one behind the aforementioned systems – is recognizing patterns at the macro/application level.
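To illustrate the micro-level case, here is a minimal sketch in Python. The function names and the currency format are hypothetical and exist only for illustration: two otherwise unrelated routines share one small formatting subroutine, so a change to how money is displayed happens in exactly one place.

```python
def format_currency(amount: float) -> str:
    """Shared subroutine: the single place that decides how money is displayed."""
    return f"${amount:,.2f}"


def invoice_line(description: str, amount: float) -> str:
    """Builds one line of an invoice, reusing the shared formatter."""
    return f"{description}: {format_currency(amount)}"


def refund_notice(customer: str, amount: float) -> str:
    """Builds a refund message, reusing the same shared formatter."""
    return f"Refund of {format_currency(amount)} issued to {customer}"
```

The consolidation here is narrow: both callers need exactly the same behavior, and neither has to bend its own requirements to use the helper.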

A Pattern of Failure

As completely different as Paid Time-Off tracking and Customer Mailing List management may seem on the outside, they do share quite a bit in common.

- Navigation of some kind that links between major and minor functions in the application

- Authentication and authorization for users of the application

- Pages/screens that view and/or edit a single record

- Lists that display multiple records based on some search criteria

- Et cetera, and so on

In fact, if we kept going, the list of similarities between applications would grow much larger than the list of differences. But that doesn’t mean that they’re basically the same thing. Just as our canine friends share an astonishing amount of DNA with us, it’s our differences that make us so unique. The same holds true in software, and forgetting that fact will inevitably lead towards the dreaded Inner-Platform effect.

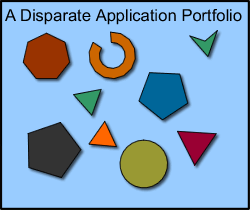

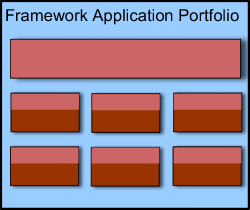

This is precisely how so many organizations end up with their own ÜberApplication. Consider, for example, that same portfolio of applications I described earlier. At one point, the applications evolved normally: i.e., they were built from scratch following development guidelines to suit the specific needs of the business client. And they looked something like this.

It’s hard to describe that portfolio as anything but chaotic, let alone accept that it’s actually how things should be. Let me repeat that last bit. A disparate application portfolio is a good thing. Proprietary software has a high strategic value to the organization, and building it in a manner that doesn’t meet the requirements largely defeats the purpose.

Still, it’s so easy – and so tempting – to forget that last part. Each new application built felt like reinventing the wheel, especially when they all had some variation of the same components: authentication, authorization, navigation, databases, etc. The rules for each of these applications were vastly different (a simple password required for one application, Active Directory group-based authorization for another), yet the designers had that overwhelming urge to abstract and “simplify” the process of creating new applications. This started at the requirements level by simply mandating that all applications share a universal set of requirements for certain things.

The uniformity was found in the UI: the layout must feature a header, a sidebar, and a footer, but the business customer can pick out the background color. It also was in the form layout: labels should be placed above form fields, and lists should always be sortable. And the database: there was to be a users table, groups table, roles table, etc.

Eventually, the applications started to blend together. Worse, the requirements conversation shifted from “how can we build software to meet these needs” to “how might we adapt the needs to meet our pre-determined requirements.”

Once the universal requirements had been defined, the next logical step was to abstract them into some sort of framework. After all, Duplication Is Evil, and it doesn’t make sense to re-implement the same User-Group-Principal-Role-Task-Operation security in each and every single application. Now, in addition to being uniformly developed, the applications were all dependent upon yet another in-house built codebase: the Global Application Framework. Granted, the GAF was little more than a wrapper on top of a framework (.NET), but somehow, it was perceived to be better.
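To make the trade-off a bit more concrete, here is a rough sketch in Python. The real systems were built on .NET, and every name below is hypothetical and invented for illustration, not taken from the client’s code. The first two functions show two applications each implementing only the rule it actually needs. The last part shows the kind of shared security model a framework like the GAF might impose, assuming a much-reduced principal-and-role design.

```python
import hmac
from dataclasses import dataclass, field


def mailing_list_allows(supplied: str, expected: str) -> bool:
    """Hypothetical app one: a single shared password is the whole rule."""
    return hmac.compare_digest(supplied.encode(), expected.encode())


def pto_tracker_allows(user_groups: set[str], required_group: str) -> bool:
    """Hypothetical app two: directory group membership is the whole rule."""
    return required_group in user_groups


@dataclass
class UniversalPrincipal:
    """Assumed shape of a 'universal' model that every application must adopt."""
    name: str
    groups: set[str] = field(default_factory=set)
    roles: set[str] = field(default_factory=set)


def universal_allows(principal: UniversalPrincipal, required_role: str) -> bool:
    """Under the shared model, every app must express its rule as a role check."""
    return required_role in principal.roles


# The password-only application now has to invent a principal and a role
# just to say what used to be a one-line comparison.
shared_account = UniversalPrincipal(name="mailing-list-shared", roles={"MailingListUser"})
```

Neither of the first two functions is interesting on its own, which is rather the point: the duplication being “removed” was a few lines per application, while the shared model adds concepts that the simpler application never needed.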

The leap from here to the ÜberApplication wasn’t far. After all, application metadata was duplicated across several points: source control, server configuration, global navigation, and so on. Removing these points of duplication, along with consolidating all of the applications in one location, brought us to where we started.

Avoiding the Path to Inner Platform

The Road to WTF is almost always paved with good intentions, and there are few intentions more noble than making developers’ lives easier. Of course, given that developers’ lives are pretty easy as is, and there are a whole bunch of companies who build developer products, it’s pretty hard to improve on them, especially when your development department is just a cog in a large corporate machine. In fact, all too often, the opposite effect happens, and the “innovation” becomes one of the biggest obstacles.

Most of us developers embrace the Don’t Repeat Yourself/Duplication Is Evil principle, and we apply it whenever we can to the software we create. But one of the deadliest mistakes we can make is waving the wand of abstraction too quickly and too broadly, and we often forget that the consequences of unnecessary duplication are far less severe than the consequences of unnecessary consolidation.