With all the investments that have recently been made in statistical/brute-force methods for simulating machine intelligence, it seems that we may at last have achieved at least the ability to err like a human.

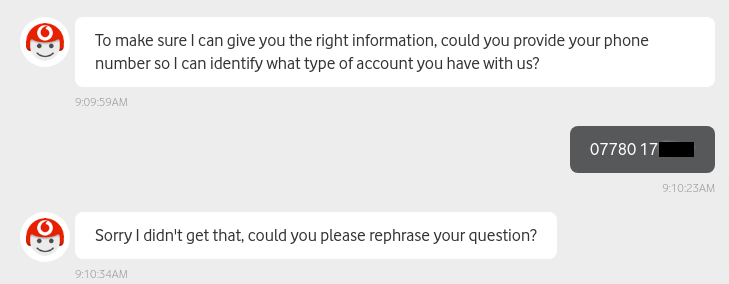

Patient Paul O. demonstrates that bots don't work well before they've had their coffee any more than humans do. "I spent 55 minutes on this chat, most of it with a human in the end, although they weren't a lot brighter than the bot."

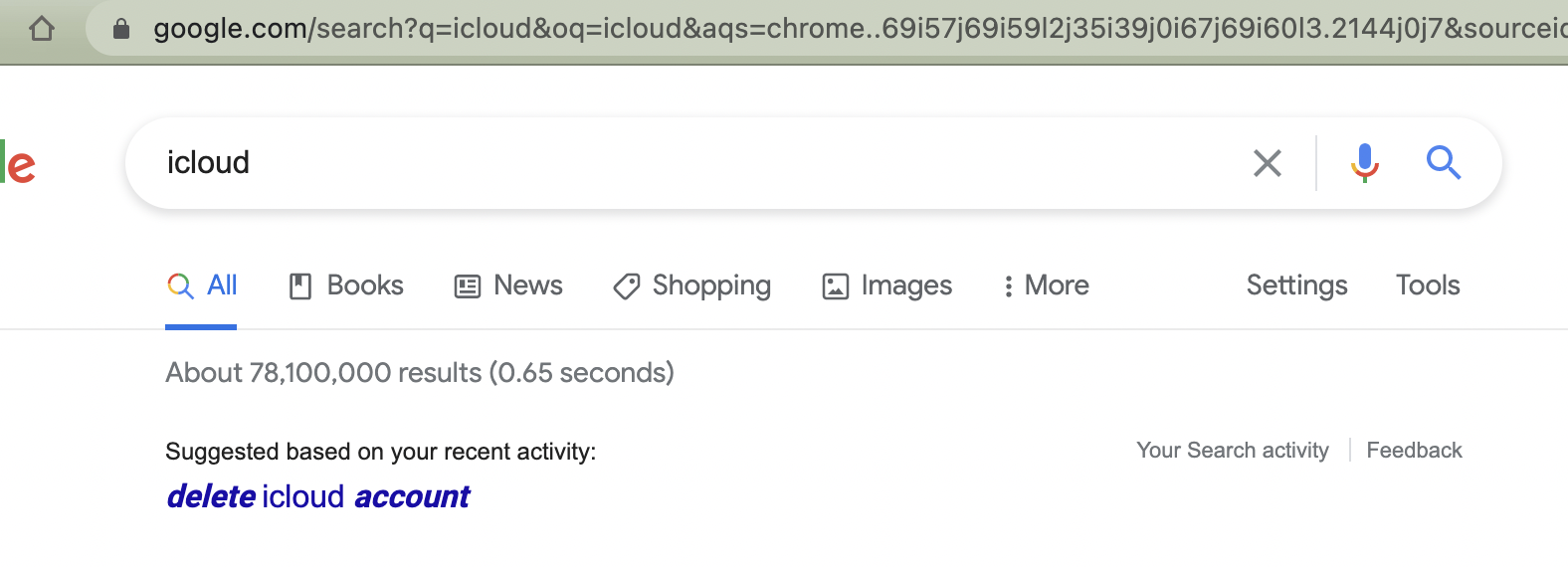

Brett N. discovers that Google's AI apparently has a viciously jealous streak: "I was just trying to log into my iCloud account."

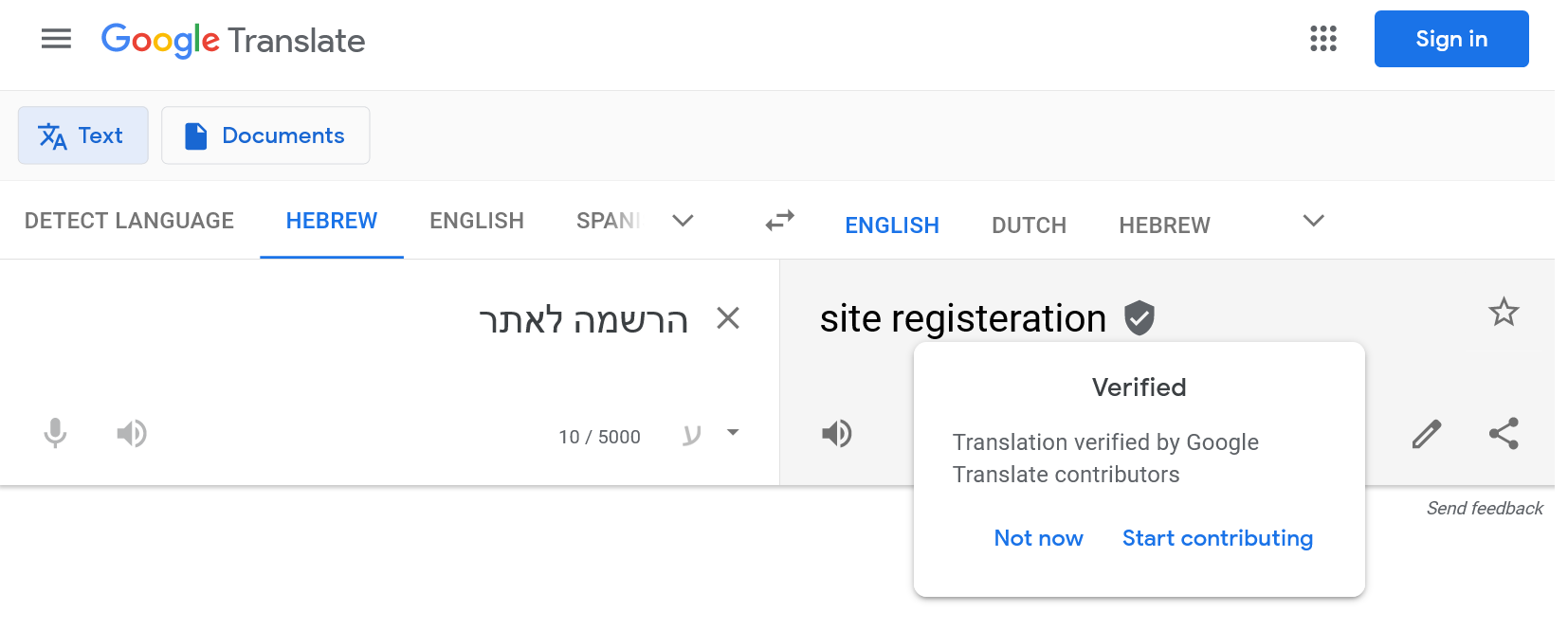

But despite stirrings of emotion, it's still struggling with its words sometimes, as Joe C. has learned. "Verified AND a shield of confidence!"

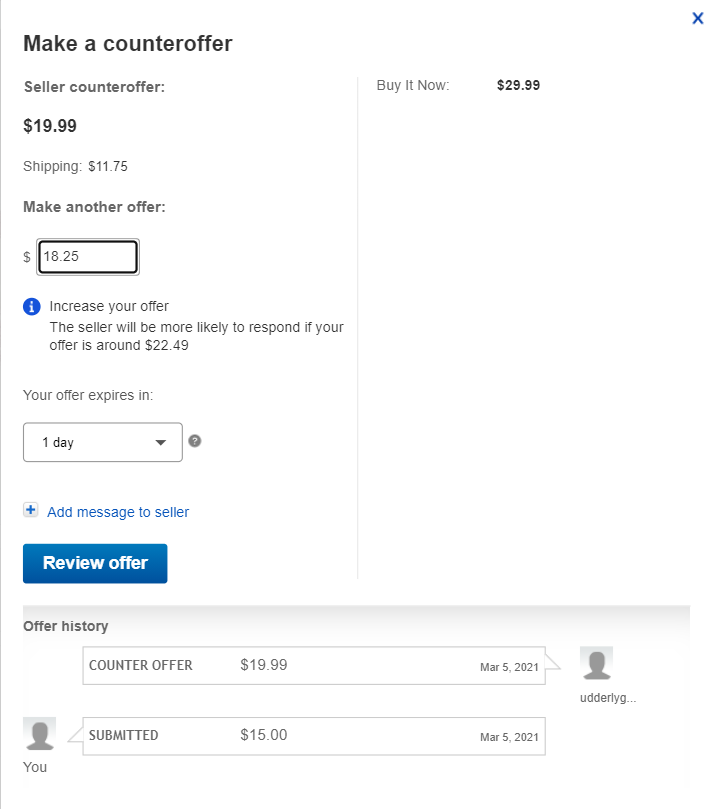

And an anonymous shopper shows us that eBay is working hard on an effective theory of mind. Like a toddler, just trying to figure out what makes the grownups tick. Says the shopper more mundanely, "I'm guessing what happened here is they made the recommendation code reference the original asking price, and didn't test with multiple counteroffers, but we'll never know."

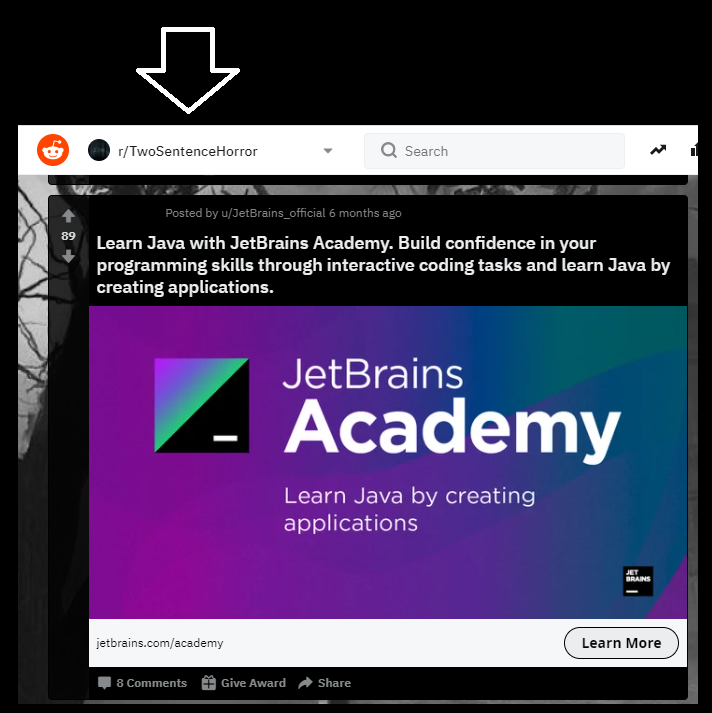

Finally, Redditor R3D3 has uncovered an adolescent algorithm going through its Goth phase. "Proof the algorithms have a sense of humor?"