Managing a long supply chain involves keeping thousands of moving parts in lock-step. From purchasing to order management, demand planning to operations, even the smallest hiccup in the chain can have massive impacts.

So when lowly IT drone Tony got a phone call from the VP of Global Purchasing, he knew there was one hell of a hiccup. “We can’t find records for a thousand product codes in the system!” the VP shrieked down the phone. “We can’t place orders if we don’t know what we need! We’re trying to plan our vendor projects for the next quarter and-”

Tony kept his cool. “Which system, specifically, lost your product codes?”

“All of them! All of the systems!”

The larger a company is, the less likely that its components behave as a single entity, working together for a common purpose. Each SBU operates according to its own rules. They are outright hostile to directives from the corporate office, and can’t possibly standardize their processes with the other SBUs. Because of this, Global Purchasing did not have a single, unified purchasing tool. There were at least six of them, possibly more.

The most recent entry into this pool was Purchize, a buzzword-based solution implemented in whatever technology you think might be the coolest; it “lives in the cloud” and promises to bring “social and mobile to your enterprise”. One of the others was Oracle’s solution. Another purchased product lived on a SQL2000 box that couldn’t be upgraded without breaking everything. There was one mainframe system, and then a little cluster of home-brew solutions, including one implemented in Access.

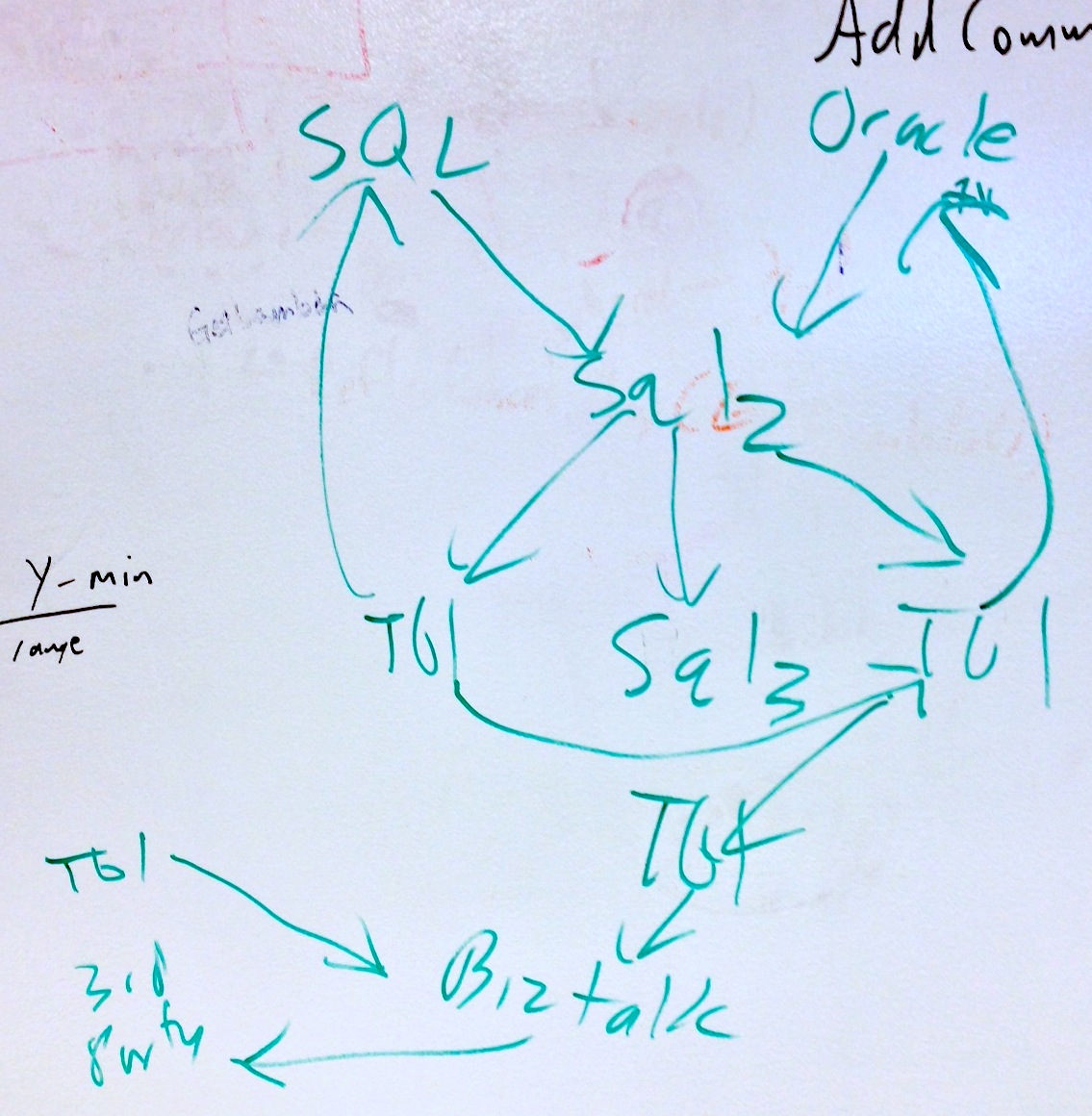

A long time ago, someone had decided to try and unify these disparate systems into a single reporting solution. This individual conceived and implemented the Purchasing Enterprise Examiner pretty much entirely by themselves. They created a database with a pile of staging tables, a pile of reporting tables, and a pile of stored procedures that moved data from staging to reporting once a day.

Users, especially managers, love reports, especially if those reports can be crammed into a dashboard. Over time, all of the individual businesses wired up their databases to the Examiner for its reports, which became the standard tool for reviewing purchasing KPIs, but this created a new problem: the data in the Examiner didn’t always exactly line up with the data in the underlying systems. Since it unified data across platforms, minor discrepencies showed up. So, all of these other systems stopped merely dumping data into the Examiner, and started extracting data too.

Since there wasn’t a working test environment, Tony had to “test” in production. He pushed some dummy records into the Examiner and watched to see what happened when he manually executed the daily job. Nothing bad happened. The records went through without issue, no data was lost. He traced the tendrils of data back to the other systems, and fonud nothing amiss. After a few hours of tracing records based on not-always-matching keys, Tony kicked off the daily job again, just to see what happened.

His dummy records were fine, but another dozen records vanished. Records which contained real production data.

Tony scrubbed through the server logs, and found a newer SQL2008 database was connecting to his ancient SQL2000 database using linked servers. No one knew who actually had set it up, but it didn’t take much poking to find that it was nothing more than a dumb copy of all of the data in the Examiner. Why?

So that BizTalk could more easily talk to it.

“Oh yeah,” the BizTalk admin said, “BizTalk doesn’t have a native connector for SQL2000 databases. So we use SQL2008 to proxy your data and pump records too and from Purchize.”

“Oh, that makes- wait, from Purchize?”

“When users edit the records, we get an XML file with their edits. We just feed that straight back into Oracle.”

“Just Oracle?” Tony asked.

“Yeah, why?”

Integration in the database layer often means trying to reconcile keys with no natural relationship. This requires some guesses, as well as deciding which system “wins” a conflict. In the case of the Purchasing Enterprise Examiner, it was the mainframe which automatically won conflicts.

Whenever a user updated a record in Purchize, the update was delivered to Oracle directly. Later, some custom PL/SQL pulled the current data from Oracle, munged it a bit, then dumped it into the Examiner. Purchize versioned the product code IDs, so these newly updated records didn’t exactly match the IDs in the mainframe. With no way to reconcile, the Examiner decided the mainframe was correct and nuked the updated records which came from Purchize through Oracle. Since all of those other systems, including the mainframe and Purchize, treated the Examiner as the system of record, they all eventually deleted those records too.

After a long day of investigating, Tony made some suggestions about how they could resolve this problem once and for all. Unfortunately, none of his suggestions were considered valid. Making any changes to how the Examiner merged data was considered a “High Risk” change- too many systems dependend on it. Changing how the various applications pulled or pushed data around would be too expensive- each application would have to change. Even worse, since many of these feeds crossed SBU boundaries, no one was certain who was actually responsible for making those changes.

Any technical change would take weeks of design, implementation and testing. Since this problem needed to be solved now, the VP of Global Purchasing handled this Gordian knot with his own Alexandrian solution. He ordered the feed back from Purchize turned off, and told the users that they simply had to keep manually enter their changes in both Purchize and Oracle.